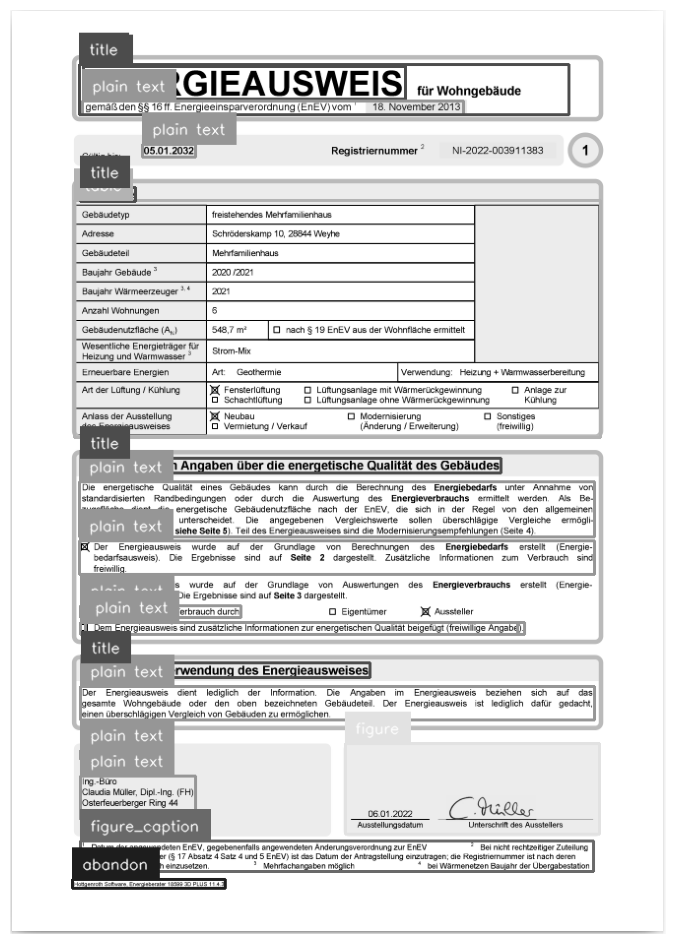

Detections(xyxy=array([[ 71.123146, 687.8052 , 593.94336 , 721.8409 ],

[ 70.97421 , 479.21695 , 593.3649 , 535.53595 ],

[ 71.24948 , 455.39197 , 498.4701 , 470.55954 ],

[ 70.65032 , 539.8029 , 593.3954 , 573.8178 ],

[ 72.065994, 663.6691 , 365.0041 , 678.9194 ],

[ 65.575966, 193.63667 , 599.0595 , 434.21326 ],

[ 63.092915, 883.88824 , 218.96974 , 892.8909 ],

[ 71.35639 , 778.1166 , 188.13594 , 822.87744 ],

[ 71.89993 , 605.70557 , 234.40808 , 617.51855 ],

[ 71.382614, 622.5114 , 522.1956 , 634.0853 ],

[340.11487 , 745.31305 , 598.5524 , 837.3176 ],

[ 74.81994 , 57.23144 , 401.61005 , 90.07859 ],

[ 71.9376 , 844.9032 , 592.02576 , 875.8439 ],

[ 73.877815, 92.0042 , 460.47784 , 106.27179 ],

[ 71.9376 , 844.9032 , 592.02576 , 875.8439 ],

[ 70.16747 , 55.55576 , 567.40125 , 106.37981 ],

[ 71.25632 , 752.0478 , 110.301605, 761.9804 ],

[ 76.37275 , 622.15454 , 515.76794 , 634.3203 ],

[ 71.25632 , 752.0478 , 110.301605, 761.9804 ],

[134.07968 , 136.17201 , 188.30263 , 149.25388 ],

[ 71.85338 , 180.87312 , 127.31638 , 194.0283 ]], dtype=float32), mask=None, confidence=array([0.9691066 , 0.96723694, 0.90364605, 0.9008068 , 0.89508706,

0.8684531 , 0.8472473 , 0.8348766 , 0.75021255, 0.68077874,

0.5642477 , 0.5191322 , 0.45062026, 0.4251406 , 0.42104587,

0.3793083 , 0.37325826, 0.3463163 , 0.29789737, 0.2091472 ,

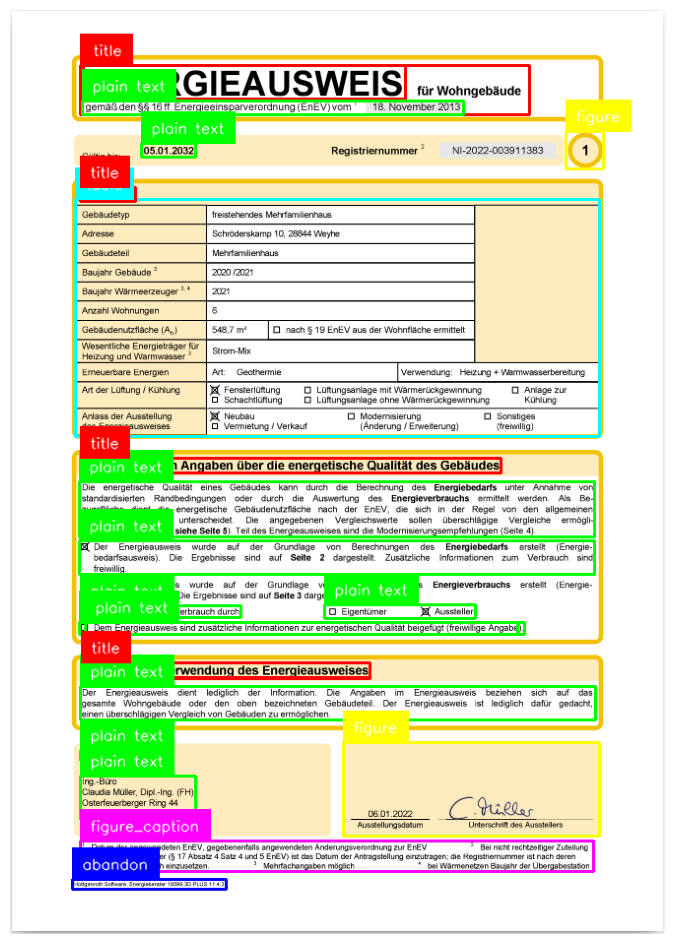

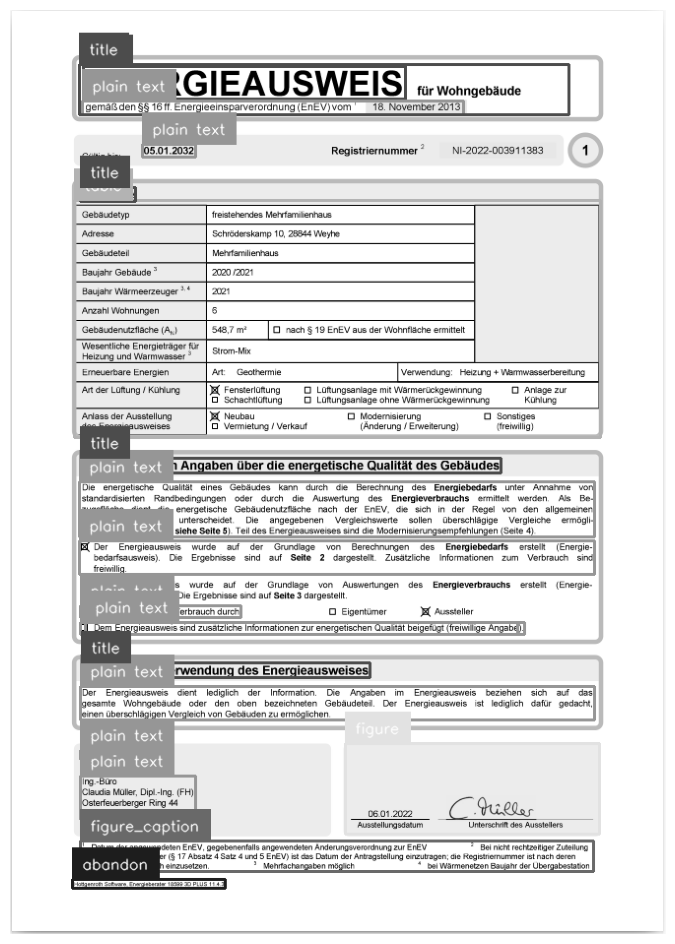

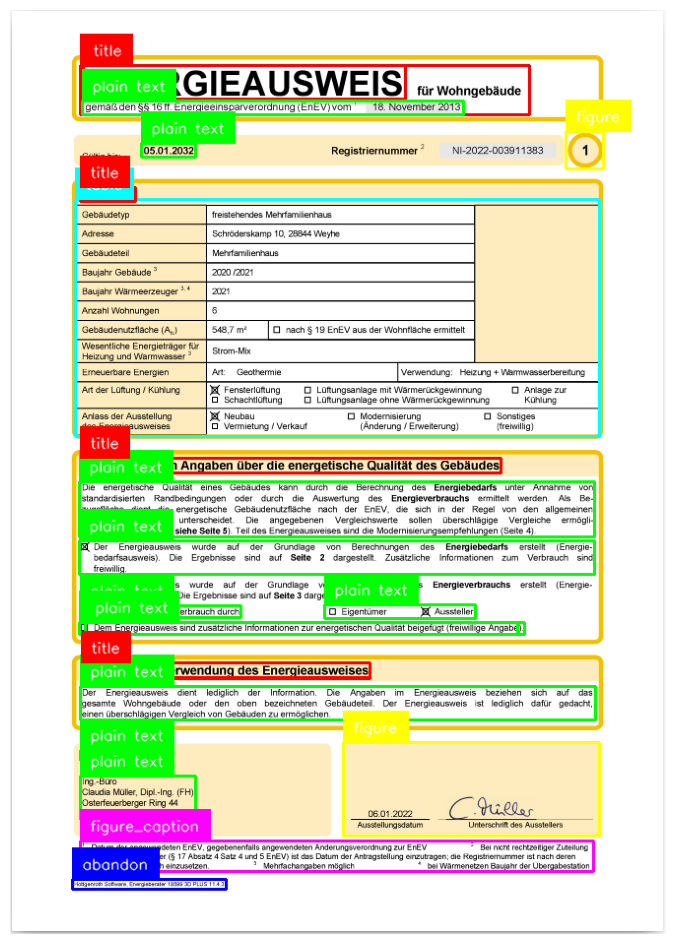

0.2041068 ], dtype=float32), class_id=array([1, 1, 0, 1, 0, 5, 2, 1, 1, 1, 3, 0, 2, 1, 4, 0, 0, 1, 1, 1, 0]), tracker_id=None, data={'class_name': array(['plain text', 'plain text', 'title', 'plain text', 'title',

'table', 'abandon', 'plain text', 'plain text', 'plain text',

'figure', 'title', 'abandon', 'plain text', 'figure_caption',

'title', 'title', 'plain text', 'plain text', 'plain text',

'title'], dtype='<U14')}, metadata={})
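
The output above contains many low-confidence detections, and some boxes appear twice with different class names (for example, the same box is labelled both `abandon` and `figure_caption`). The following is a minimal sketch of one possible way to post-process such results in plain Python. The rows are copied from the output above; the helper names `keep_confident` and `drop_repeated_boxes` and the 0.5 threshold are assumptions made for illustration, not part of the original pipeline.

```python
# A few (box, confidence, class_name) rows copied from the output above.
rows = [
    ((71.123146, 687.8052, 593.94336, 721.8409), 0.9691066, "plain text"),
    ((71.24948, 455.39197, 498.4701, 470.55954), 0.90364605, "title"),
    ((65.575966, 193.63667, 599.0595, 434.21326), 0.8684531, "table"),
    ((340.11487, 745.31305, 598.5524, 837.3176), 0.5642477, "figure"),
    ((71.9376, 844.9032, 592.02576, 875.8439), 0.45062026, "abandon"),
    ((71.9376, 844.9032, 592.02576, 875.8439), 0.42104587, "figure_caption"),
    ((71.85338, 180.87312, 127.31638, 194.0283), 0.2041068, "title"),
]


def keep_confident(rows, threshold=0.5):
    """Keep rows whose confidence is at least `threshold` (0.5 is an assumed value)."""
    return [row for row in rows if row[1] >= threshold]


def drop_repeated_boxes(rows):
    """For each exactly repeated box, keep only the highest-confidence row."""
    best = {}
    for box, confidence, name in rows:
        if box not in best or confidence > best[box][1]:
            best[box] = (box, confidence, name)
    # Return the survivors sorted by descending confidence.
    return sorted(best.values(), key=lambda row: row[1], reverse=True)


confident = keep_confident(rows)
unique = drop_repeated_boxes(rows)

print([name for _, _, name in confident])
print(len(unique))
```

If the results are kept as a supervision `Detections` object, a boolean mask such as `detections[detections.confidence > 0.5]` should give the equivalent confidence filter. Exact-match de-duplication only catches identical boxes; near-duplicates such as the two boxes around y ≈ 622 would need an overlap-based method instead.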